The Implications of the EU's AI Act and the Future

European Commissioner for the Internal Market, Thierry Breton

Last week, the European Union agreed its AI Act, a piece of legislation designed to regulate artificial intelligence. The Act aims to establish safeguards for EU citizens while enabling business growth, a fine line to walk.

The impact of this legislation, though, won't be felt only within the trading bloc. As with GDPR, it will also shape how companies outside the EU build AI if their generative tools are to be used by EU citizens.

With this Act, the EU has seized the lead on regulation and started a global discussion that countries and trading blocs around the world will either agree or disagree with.

But the journey to this legislation started decades ago, and it rests on the values on which the EU was founded.

What is the EU proposing?

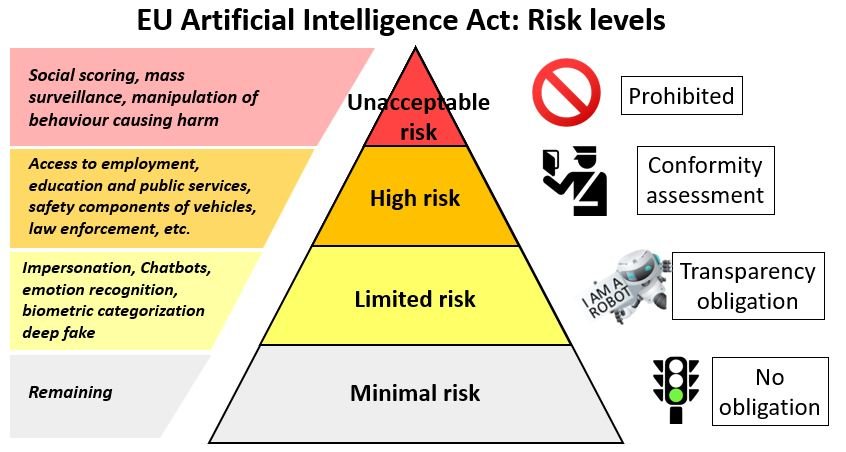

The EU is using a risk-based system that categorises AI applications by the risk they pose. The higher the risk, the stricter the regulation, with the focus on safety and the rights of the individual. Applications deemed to pose an unacceptable risk, such as social scoring and certain uses of biometric data, will be banned.
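To make the tiered approach more concrete, here is a minimal Python sketch. It assumes the four broad tiers commonly used to describe the Act (unacceptable, high, limited and minimal risk). The enum, the `obligations_for` function and the example use cases are hypothetical illustrations, not a legal classification tool. Real classification depends on the Act's text and annexes.

```python
from enum import Enum


class RiskTier(Enum):
    """Four broad risk tiers commonly used to describe the AI Act (simplified)."""
    UNACCEPTABLE = "prohibited"
    HIGH = "strict obligations before and after market entry"
    LIMITED = "transparency obligations"
    MINIMAL = "no new obligations"


# Hypothetical, simplified mapping for illustration only: the real Act
# classifies systems through legal criteria, not a lookup table.
EXAMPLE_USE_CASES = {
    "social scoring by public authorities": RiskTier.UNACCEPTABLE,
    "CV screening for recruitment": RiskTier.HIGH,
    "customer-service chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def obligations_for(use_case: str) -> str:
    """Return the simplified regulatory consequence for a known example use case."""
    tier = EXAMPLE_USE_CASES.get(use_case)
    if tier is None:
        raise KeyError(f"unclassified use case: {use_case!r}")
    return f"{tier.name}: {tier.value}"


if __name__ == "__main__":
    # Print each example use case alongside its tier and consequence.
    for case in EXAMPLE_USE_CASES:
        print(f"{case} -> {obligations_for(case)}")
```

The design point is simply that obligations attach to the tier rather than to the technology. The same model could sit in any of the four tiers depending on how it is used.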

Transparency requirements will oblige those building AI models to disclose certain types of information, helping to ensure compliance with other laws, such as EU copyright law.

The Act also gives the EU the power to carry out algorithmic audits and to issue fines.

Where did the journey towards this deal really start?

The journey started during the early Internet days, between the 1980s and 1990s, when data was limited and primarily text-based. The focus at that time was more on connectivity and less on data accumulation.

However, during the dot-com boom of the late 90s and early 2000s, companies scaled their adoption of the Internet as a channel for commerce and communication.

During this period and the years that followed, search engines like Google and social media platforms like Facebook, Twitter and YouTube grew. Their model was built on data capture, so that advertising products could be developed to finance growth.

In the early 2010s, cloud computing and Big Data took hold, the latter characterised by the three Vs: volume, velocity and variety. Companies began collecting and analysing vast amounts of data for insights.

This volume of data accelerated the development of machine learning, particularly deep learning, which made significant advances in extracting insight from large datasets. Neural networks trained on these datasets began to achieve impressive results in tasks such as image and speech recognition.

Generative models, such as GANs (Generative Adversarial Networks), also started to emerge, capable of creating realistic images, texts, and other data forms.

Today we find ourselves at a stage where generative AI models, like GPT (Generative Pre-trained Transformer), Bard, Claude and others, have become more sophisticated, benefiting from vast accumulated datasets and improved algorithms.

These models have begun to find applications in various fields, from content creation to scientific research, driven by their ability to generate realistic and coherent outputs.

The pace of change in AI is remarkable, leading companies and regulators to focus on ethical implications and data privacy alongside the development of more advanced, context-aware systems.

How much data are we producing today?

We are producing vast amounts of data, which is enabling the exponential growth of AI and generative AI.

On average, each person produces approximately 1.7 megabytes (MB) of data per day, or about 620MB per year. Forecasts for 2025 are considerably higher, though estimates vary widely, from 1.5GB to as much as 27 terabytes (TB) per person per year.
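The per-day and per-year figures above are linked by simple arithmetic. This small Python sketch shows the conversion. It assumes decimal units (1GB = 1,000MB) and a 365-day year, and the helper names are hypothetical. Under those assumptions, a 1.7MB daily rate works out at roughly 620MB a year.

```python
MB_PER_GB = 1_000   # assumption: decimal (SI) units rather than 1,024
DAYS_PER_YEAR = 365  # assumption: ignore leap years


def annual_mb(daily_mb: float) -> float:
    """Convert a daily data-generation rate in MB to an annual total in MB."""
    return daily_mb * DAYS_PER_YEAR


def to_gb(mb: float) -> float:
    """Convert megabytes to gigabytes using decimal units."""
    return mb / MB_PER_GB


yearly = annual_mb(1.7)
print(f"1.7 MB/day is about {yearly:.1f} MB/year ({to_gb(yearly):.2f} GB)")
```

The same two helpers can be used to sanity-check any per-person forecast, by converting it to a common unit before comparing it with today's average.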

Today, this data comes from our internet browsing, use of mobile applications, digital purchases, social media posts, location data from smartphones, and more. However, those who are more digitally immersed and active online could be generating several times more data than the average.

Of course, in the early days of the internet, we were creating mostly text-based communication and information, which was shared among small networks of researchers and academics. AI was a mere concept.

During the 1990s, as more people started to use the internet and early search engines, we still created little data per person, maybe a few megabytes of emails, documents, and browsing history per year.

The rise of social media during the 2010s changed that, as platforms encouraged billions of people to share massive amounts of personal data: photos, videos, messages, interests and behavioural data. Data generation exploded, with the average user creating multiple gigabytes per year.

As Big Data and AI grew, the resulting datasets became so large that AI began to unlock new capabilities. Today, an average person might generate tens of gigabytes per year through their online activity.

With billions of people contributing, total global data is measured in exabytes and zettabytes. However, many of these datasets are siloed, so work is under way to bring data together to improve generative AI.

But these datasets raise issues, especially from a legal and data privacy point of view. These are issues that the EU's AI Act, and the legislation that follows it elsewhere, will look to resolve.

There are also issues around the factual accuracy of these datasets and the biases that algorithms can absorb. These biases come not so much from deliberate misinformation as from data that is simply wrong or unverified. Such issues can affect tactical and strategic decisions, which in turn impact reputation and trust.

Why are countries creating regulations for AI and generative AI?

Simply put, regulation is about safety, security and business growth. When it comes to growth, it is also about gaining an early competitive advantage.

For example, being a leader in AI can significantly boost a country’s economic growth:

Innovation and New Industries: AI leadership fosters innovation, leading to the creation of new industries and job opportunities. It also enhances existing industries by improving their efficiency.

Attracting Investment: Countries and trading blocs leading in AI are magnets for investment from multinational corporations and startups focused on cutting-edge technology.

Enhancing Public Services: AI can revolutionise public services, making them more efficient and accessible, thus improving overall quality of life and economic stability.

International Influence: Leadership in AI technology grants a country or trading bloc significant influence in shaping global digital policies and practices.

Singapore recently announced its own AI strategy, under which AI is 'no longer seen as a good to have, but a must-have.' The strategy focuses on:

Building a robust talent pool

Fostering an AI-friendly ecosystem

Encouraging public-private partnerships

Singapore and its Economic Development Board (EDB) excel at looking strategically and across silos, both in setting strategy and in designing delivery that drives growth.

For the UK, following the recent AI Safety Summit, AI is central to its focus on growth, with the remit held by the Department for Science, Innovation and Technology (DSIT). Yet to secure that growth, especially given the pace at which other markets are moving, a cross-government approach is needed.

The US, meanwhile, is going down a different route, with states taking the lead over federal regulation. This is despite the President issuing an Executive Order on safe, secure and trustworthy AI, and the White House publishing a Blueprint for an AI Bill of Rights.

What is next for AI and generative AI?

The next phase in generative AI is expected to focus on several areas, including:

Ethical AI and Responsible Data Use: As data generation grows, so does the need for ethical considerations in AI training and usage, including privacy concerns and bias mitigation.

Context-Aware and Personalised AI: Future AI systems are expected to be more nuanced in understanding context, leading to highly personalised and accurate content generation.

Integration with Emerging Technologies: The combination of AI with other technologies like augmented reality, quantum computing, and the Internet of Things (IoT) will likely open new frontiers in data generation and utilisation.

Sustainable AI: With increasing awareness of the environmental impact of data centres and computing resources, there will be a push towards more energy-efficient and sustainable AI practices. Chip designers such as Arm are developing their architectures with a focus on decarbonising compute.

What risks exist in this AI race?

Countries' efforts to lead in AI and generative AI, which can deliver business growth, could be undermined by:

Insufficient Investment in Research and Development: AI and generative AI are rapidly evolving fields requiring substantial investment in research and development. Failure to allocate enough resources could hinder a country's ability to stay at the forefront of AI advancements.

Talent Shortage: A lack of skilled professionals in AI and related fields can be a significant barrier. This includes not only AI researchers and developers but also a workforce capable of understanding and working with AI technologies. This issue can only be resolved through investment in education, an area in which many countries have been leading for years.

Inadequate Infrastructure: AI technologies, particularly data-intensive ones like generative AI, require robust and modern digital infrastructure, including high-speed internet and powerful computing resources. Inadequate infrastructure can limit the development and deployment of AI solutions. Countries such as Saudi Arabia and the UAE, for example, have been doing deals to buy Nvidia chips to power their AI ambitions. AI companies based in the UAE are themselves having to decide which side they will be on.

Data Privacy and Security Concerns: As AI systems rely heavily on data, concerns about privacy and data security can impede their adoption. Failure to establish strong data protection laws and ethical guidelines can lead to public distrust and reluctance in embracing AI technologies.

Regulatory Hurdles: Overly stringent or unclear regulatory frameworks can slow down AI innovation and adoption. Countries need to find a balance between regulating AI to ensure safety and ethics without stifling innovation. The EU and UK are leaning towards a more structured and holistic legal framework, while the US shows a more fragmented approach with some movement towards federal legislation. China's governance model, on the other hand, seems to be evolving in response to both internal and external factors, balancing innovation with societal control. All these strategies are shaping the AI world we live in, creating AI bubbles.

Public Perception and Resistance to Change: Resistance to technological change among the public and within organisations can slow down AI adoption. Misunderstandings and fears about AI, often stemming from a lack of awareness, can fuel that resistance. The narrative focuses on the negatives, such as the risk to jobs, rather than on the value and opportunities that AI and generative AI can deliver. Perception matters because it shapes trust. Reputation matters.

Global Competition: The race for AI leadership is a global one. Intense competition from other countries, which may have more resources or a more aggressive approach, can undermine a nation's efforts to lead in AI. AI is everywhere and crosses silos, which is why those responsible for policy need to look strategically and beyond their usual boundaries.

Intellectual Property Challenges: Issues surrounding IP rights in AI can lead to legal battles and uncertainty, potentially slowing down innovation and collaboration in the field. The key questions are: what data are the LLMs behind generative AI solutions trained on? Is it open-source data, or copyrighted material protected by IP? Are data owners rewarded for helping to build generative AI solutions?

Ethical and Societal Impacts: Concerns about the ethical implications of AI, such as job displacement, bias in AI algorithms, misinformation and the societal impact of autonomous systems, need to be addressed comprehensively.

Are the generative AI models that we are building for a global world or a Western world?

Ask yourself: who is building ChatGPT, Claude, Bard and all the other GPT and generative AI services? Where are they based? What is their primary language? What are their cultural and societal norms?

Outside the tech bubble, people live in communities, often hyperlocal ones, where accents and cultural norms can help or hinder understanding.

Cultural issues play a significant role in how AI and generative AI learn and offer support, particularly concerning the diversity of written and spoken languages. These issues can impact the effectiveness, accessibility, and fairness of AI systems:

Language Bias in Training Data: Most AI models, especially generative ones, are trained on datasets predominantly in English or other major languages. This can lead to biases where the AI performs better in these languages and struggles with less common languages, potentially excluding non-English speakers or speakers of less common languages from fully benefiting from AI technologies.

Cultural Context and Nuances: Language is deeply intertwined with culture. AI models that lack exposure to diverse cultural contexts may misinterpret or misrepresent nuances, idioms, and cultural references. This can lead to misunderstandings or inappropriate responses, particularly in sensitive areas like mental health support or customer service.

Ethical and Societal Norms: Different cultures have varying ethical and societal norms, which can impact how AI is perceived and what is considered appropriate or acceptable in AI interactions. For instance, the directness of communication, notions of privacy, or the role of technology in decision-making can vary widely.

Localisation Challenges: Localising AI applications involves more than translating language; it requires adapting the application to local customs, regulations and user expectations. This can be complex, especially for generative AI models that need to understand and generate contextually appropriate content. Multilingual models that can handle 100+ languages are emerging, offering greater global accessibility and applicability across locales. That said, models optimised for a single language tend to show higher fidelity of generation, so both breadth and specificity are growing.

Representation in AI Development: The underrepresentation of certain cultures and languages in the AI field can lead to a lack of understanding and consideration of these perspectives in AI development. This can result in AI systems that do not fully cater to the needs of diverse global populations.

Accessibility Issues: The dominance of certain languages in AI can create accessibility barriers for non-native speakers or those with limited proficiency in these languages, thereby exacerbating digital divides.

Algorithmic Cultural Bias: AI algorithms can inadvertently learn and perpetuate cultural biases present in their training data, leading to unfair or biased decision-making processes that might disadvantage certain cultural groups.

To address these challenges, it is important for AI developers and researchers to focus on creating more inclusive and diverse datasets, incorporating multicultural and multilingual perspectives in AI development, and ensuring that AI systems are tested and adapted for different cultural contexts. Additionally, involving linguists, anthropologists, and other cultural experts in the AI development process can help in understanding and integrating cultural nuances more effectively.
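A practical first step towards the more inclusive and diverse datasets described above is measuring how skewed a corpus is. This Python sketch assumes that each document has already been tagged with a language code, for example by a separate language-identification step that is not shown. The function names, the 5% threshold and the toy corpus are hypothetical and chosen only for illustration.

```python
from collections import Counter


def language_shares(doc_languages: list[str]) -> dict[str, float]:
    """Return each language's share of a corpus, given one language tag per document."""
    if not doc_languages:
        return {}
    counts = Counter(doc_languages)
    total = len(doc_languages)
    # most_common() orders languages from most to least represented.
    return {lang: n / total for lang, n in counts.most_common()}


def underrepresented(shares: dict[str, float], threshold: float = 0.05) -> list[str]:
    """List languages whose share of the corpus falls below the chosen threshold."""
    return sorted(lang for lang, share in shares.items() if share < threshold)


# Hypothetical toy corpus: ISO 639-1 codes, one per document
# (English, French, Swahili, Welsh).
corpus_tags = ["en"] * 90 + ["fr"] * 6 + ["sw"] * 3 + ["cy"] * 1
shares = language_shares(corpus_tags)
print(shares)
print(underrepresented(shares))
```

Counting by document is the simplest choice. Counting by tokens or characters would weight long documents more heavily and may give a different picture of the skew.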

We are living and working in an era where language is no longer just language; it is data. When building generative AI tools, we need to consider local context to ensure that the future we are building is safe and works for everyone.

We are in an era of change and opportunity, but also one of risk, in which we need to remember that perception and reputation matter if we are to see value and growth from AI.

A study by PwC estimated that AI could contribute up to $15.7 trillion to the global economy by 2030! With the right ethical governance frameworks in place, generative AI may usher in a new era of inclusive and sustainable growth.

But before anything near these numbers is realised, generative AI and other AI tools will require investment, primarily from VCs, CVCs (corporate venture capital arms) and others.

Make no mistake: for value and safety, companies will have to back their in-house or arm's-length investment vehicles to identify how best to find efficiencies.

Venture capitalists have generally led the way in supporting innovation; perhaps now is the time for corporate VCs.

The opportunities and value that generative AI can deliver need to be designed into the safety net that citizens expect.

In an era of uncertainty, technology and innovation can deliver growth.